When you encode behavioral traits as numerical parameters, LLMs interpret them reliably enough to run genetic algorithms on agent populations. An agent with Curiosity 85%, Greed 15%, Caution 75% behaves measurably differently from one with Curiosity 20%, Greed 90%, Caution 10%. These numerical traits can function as genes in an evolutionary system.

Traits themselves act as genes while their numerical strengths function like epigenetic expression levels. An agent's genetic string might be C85,G15,Ca75, where each trait and its strength combine to form the expressed phenotype. The system optimizes how strongly each one influences behavior. Over generations, agents can converge on phenotypes that balance trait combinations that are ideal for their given environment.
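As a minimal sketch of that encoding, the snippet below parses a string like C85,G15,Ca75 into named traits and renders it back out. The abbreviation table, the function names, and the "Trait: N%" prompt wording are illustrative assumptions, not the exact format used in the experiment.

```python
import re

# Assumed abbreviation table: only C, G, and Ca appear in the example string.
TRAIT_NAMES = {"C": "Curiosity", "G": "Greed", "Ca": "Caution"}


def parse_genome(genome: str) -> dict[str, int]:
    """Turn 'C85,G15,Ca75' into {'Curiosity': 85, 'Greed': 15, 'Caution': 75}."""
    traits = {}
    for gene in genome.split(","):
        match = re.fullmatch(r"([A-Za-z]+)(-?\d+)", gene.strip())
        if match is None:
            raise ValueError(f"malformed gene: {gene!r}")
        code, strength = match.group(1), int(match.group(2))
        traits[TRAIT_NAMES[code]] = strength
    return traits


def format_genome(traits: dict[str, int]) -> str:
    """Inverse of parse_genome: trait dict back to the compact string."""
    codes = {name: code for code, name in TRAIT_NAMES.items()}
    return ",".join(f"{codes[name]}{value}" for name, value in traits.items())


def to_prompt_fragment(traits: dict[str, int]) -> str:
    """Render traits as a short line that could sit inside a system prompt."""
    return ", ".join(f"{name}: {value}%" for name, value in traits.items())
```

For example, `to_prompt_fragment(parse_genome("C85,G15,Ca75"))` gives a one-line fragment that stays within a handful of tokens no matter how the strengths change.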

The approach involves spawning a population of agents with different genetic profiles that compete for survival. Their responses are judged, and those scores create the fitness pressure. Low performing trait combinations die off, while high performers reproduce, passing mutated versions of their traits to offspring. Over generations, the population evolves toward trait configurations that succeed in their environment.
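One generation of that loop could be sketched roughly as follows. The selection scheme (keep the top half), the mutation step size, and the 0 to 100 trait range are assumptions chosen for illustration; the scores are whatever your judge produced.

```python
import random


def mutate(traits, rng, step=10, low=0, high=100):
    """Nudge each trait by a small random amount, clamped to [low, high]."""
    return {
        name: max(low, min(high, value + rng.randint(-step, step)))
        for name, value in traits.items()
    }


def next_generation(population, scores, rng, survive_fraction=0.5):
    """Keep the top scorers and refill the population with mutated offspring.

    population: list of trait dicts; scores: parallel list of fitness scores.
    """
    ranked = sorted(zip(scores, range(len(population))), reverse=True)
    n_keep = max(1, int(len(population) * survive_fraction))
    survivors = [population[i] for _, i in ranked[:n_keep]]

    offspring = []
    while len(survivors) + len(offspring) < len(population):
        # Cycle through survivors so each gets a chance to reproduce.
        parent = survivors[len(offspring) % len(survivors)]
        offspring.append(mutate(parent, rng))
    return survivors + offspring
```

Passing in a seeded `random.Random` keeps runs reproducible, which helps when comparing how different environments shape the same starting population.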

It's not quite a text-based prompt optimization framework like DSPy, GEPA or EvoPrompt, which evolve the prompt text itself through iterative feedback loops over the instructions, system prompt, or context. Those approaches generate, evaluate, and improve the prompts themselves. Here, the genetic prompt string stays a fixed 10-20 tokens, and only the numerical parameters change. That said, these approaches can coexist. Parameterized behavioral traits can sit next to a larger system prompt that's separately optimized by something like DSPy.

Deterministic Control Through Numeric Parameters

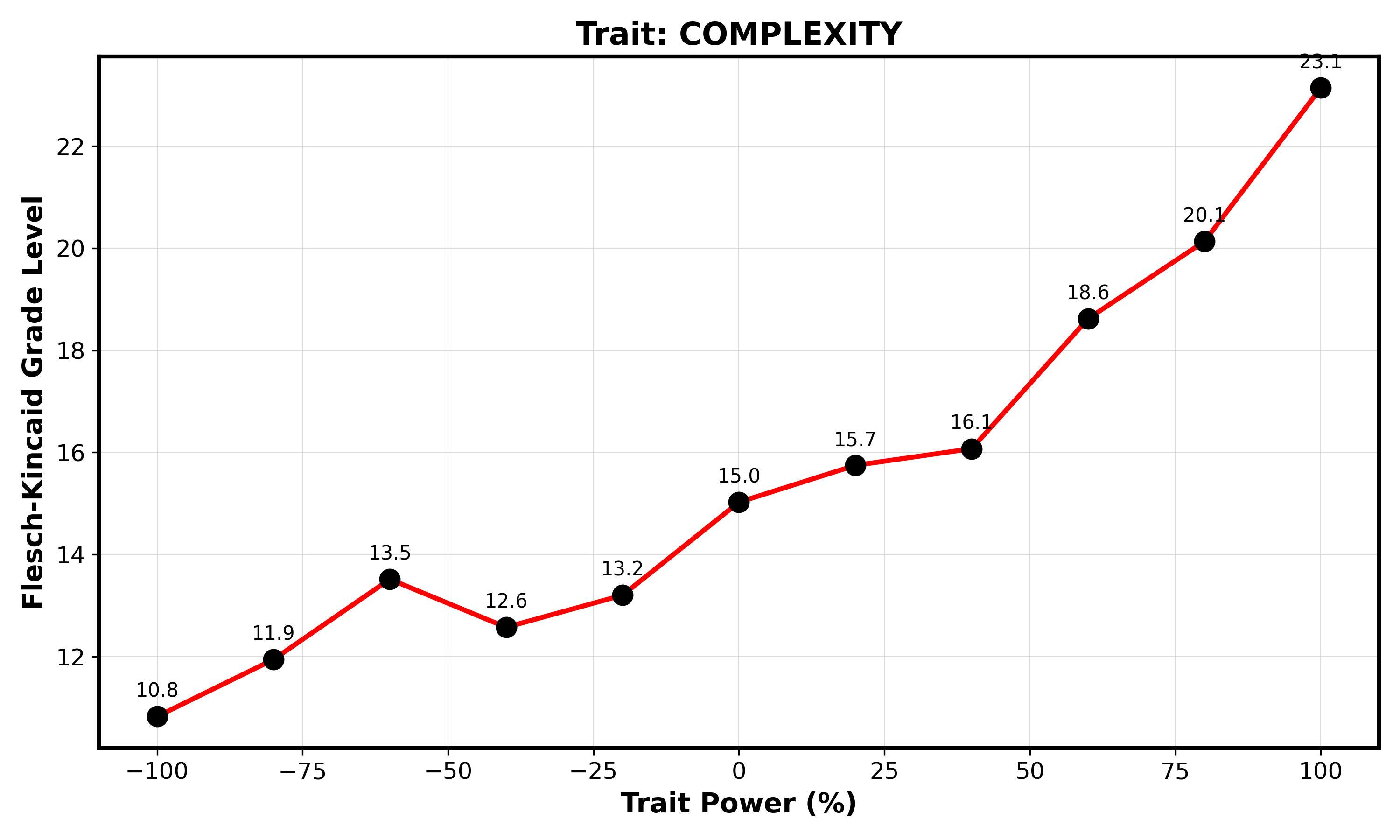

The entire approach depends on a simple question: can LLMs reliably interpret numerical trait values and adjust their behavior accordingly? If you tell an agent "Complexity: 20%" versus "Complexity: 80%", does it actually produce measurably different outputs?

Testing this with the complexity trait shows a clear linear relationship. As the trait parameter increases from -100% to 100%, the linguistic complexity of the agent's output (measured by Flesch-Kincaid grade level) rises predictably from about 11th grade reading level to graduate-level complexity. The LLM interprets the number, understands what it means, and adjusts its behavior accordingly.
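For reference, the Flesch-Kincaid grade level is a fixed formula over word, sentence, and syllable counts. The sketch below implements the standard formula; the syllable counter is a crude vowel-group heuristic added for illustration, and is not necessarily how the measurements above were taken.

```python
import re


def flesch_kincaid_grade(words: int, sentences: int, syllables: int) -> float:
    """Standard Flesch-Kincaid grade level from raw counts."""
    if words <= 0 or sentences <= 0:
        raise ValueError("need at least one word and one sentence")
    return 0.39 * (words / sentences) + 11.8 * (syllables / words) - 15.59


def count_syllables(word: str) -> int:
    """Rough heuristic: count runs of vowels, with a minimum of one."""
    return max(1, len(re.findall(r"[aeiouy]+", word.lower())))


def grade_of_text(text: str) -> float:
    """Approximate grade level of a text using the heuristic counter."""
    sentences = [s for s in re.split(r"[.!?]+", text) if s.strip()]
    words = re.findall(r"[A-Za-z']+", text)
    syllables = sum(count_syllables(w) for w in words)
    return flesch_kincaid_grade(len(words), len(sentences), syllables)
```

Scoring each response with `grade_of_text` and plotting it against the trait value is one way to check whether the relationship holds for a given model.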

This interpretability is what makes genetic algorithms viable for LLM behavioral control. You get deterministic, numeric handles on otherwise fuzzy concepts. No prompt generation required. We're evaluating outputs, not generating new prompts.

The fact that LLMs can reliably map numbers to behavioral attributes means the genetic system can explore the space systematically.

Biomimicry as Design Pattern

Eval workflows should ideally affect agents directly rather than needing a developer to adjust prompts. Genetic algorithms can help close that loop: eval scores become the fitness function, low-performing agents die off, and high-performing agents reproduce.

Evals inform the fitness function, so if you're scoring agents on task completion, those scores can control which trait combinations persist from one generation to the next. Breeding propagates successful trait combinations while low-scoring combinations die off, allowing effective combinations to spread through the population. Mutation makes small random changes to trait values, which helps the system explore the space and find promising pockets of the fitness landscape.

The attribute strings are analogous to genes, and their numerical strengths represent the epigenetics, the expression levels of those genes. The phenotype is then the final assembled genetic string together with the behavior it produces. This creates a self-balancing system. If your eval captures what actually matters, the population will drift toward agents that perform well on those metrics.

To test whether this actually works, I built an artificial life simulation where a population of agents with random trait values compete in an agricultural survival environment. The setup creates selection pressure through resource scarcity and time constraints.

The environment is a 10x10 grid where tiles have different fertility levels. Some tiles yield 12 food units per harvest, others only 1-2. Agents can check a tile's fertility before planting, but checking costs precious action time. Each agent gets a limited number of actions per turn, forcing constant trade-offs. Do they spend actions checking fertility, or just plant blindly and hope for the best? Do they keep claiming more territory, or do they harvest the crops that are ready?

Crops take 2 turns to mature, creating a near-term versus long-term planning tension. Plant now and you're investing in actions that won't pay off immediately. Harvest too late and you might starve before the next yield. Every agent consumes food each turn just to survive, and it needs to accumulate 20 food to reproduce before it hits the maximum age of 15 turns and dies.
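These survival rules can be written down as a small end-of-turn update. This is a sketch, not the simulation's actual code: the maturity time, reproduction threshold, and maximum age come from the description above, while the per-turn upkeep, the starting food, and the idea that reproducing spends the stored food are assumptions.

```python
from dataclasses import dataclass

MATURITY_TURNS = 2       # from the description: crops take 2 turns to mature
REPRODUCTION_FOOD = 20   # from the description: food needed to reproduce
MAX_AGE = 15             # from the description: maximum age in turns
UPKEEP_PER_TURN = 1      # assumption: per-turn food consumption is not specified


@dataclass
class Agent:
    food: int = 5        # assumption: starting food is not specified
    age: int = 0
    alive: bool = True


def crop_ready(planted_turn: int, current_turn: int) -> bool:
    """A crop can be harvested once MATURITY_TURNS have passed."""
    return current_turn - planted_turn >= MATURITY_TURNS


def end_of_turn(agent: Agent) -> list[str]:
    """Apply upkeep and aging, then report what happened to the agent."""
    agent.food -= UPKEEP_PER_TURN
    agent.age += 1
    if agent.food < 0:
        agent.alive = False
        return ["starved"]

    events = []
    if agent.food >= REPRODUCTION_FOOD:
        agent.food -= REPRODUCTION_FOOD  # assumption: reproducing spends the food
        events.append("reproduced")
    if agent.age >= MAX_AGE:
        agent.alive = False
        events.append("died_of_old_age")
    return events
```

Keeping the rules in a handful of constants makes it easy to vary the selection pressure, for example by raising the upkeep to see whether cautious strategies become viable.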

These mechanics create an environment in which agent traits can directly influence survival. High caution agents might over-check fertility and starve from inaction. Low foresight agents might fail to plant enough crops for future turns. High greed without curiosity might lead to claiming bad territory. Agents whose traits balanced these pressures survived and reproduced.

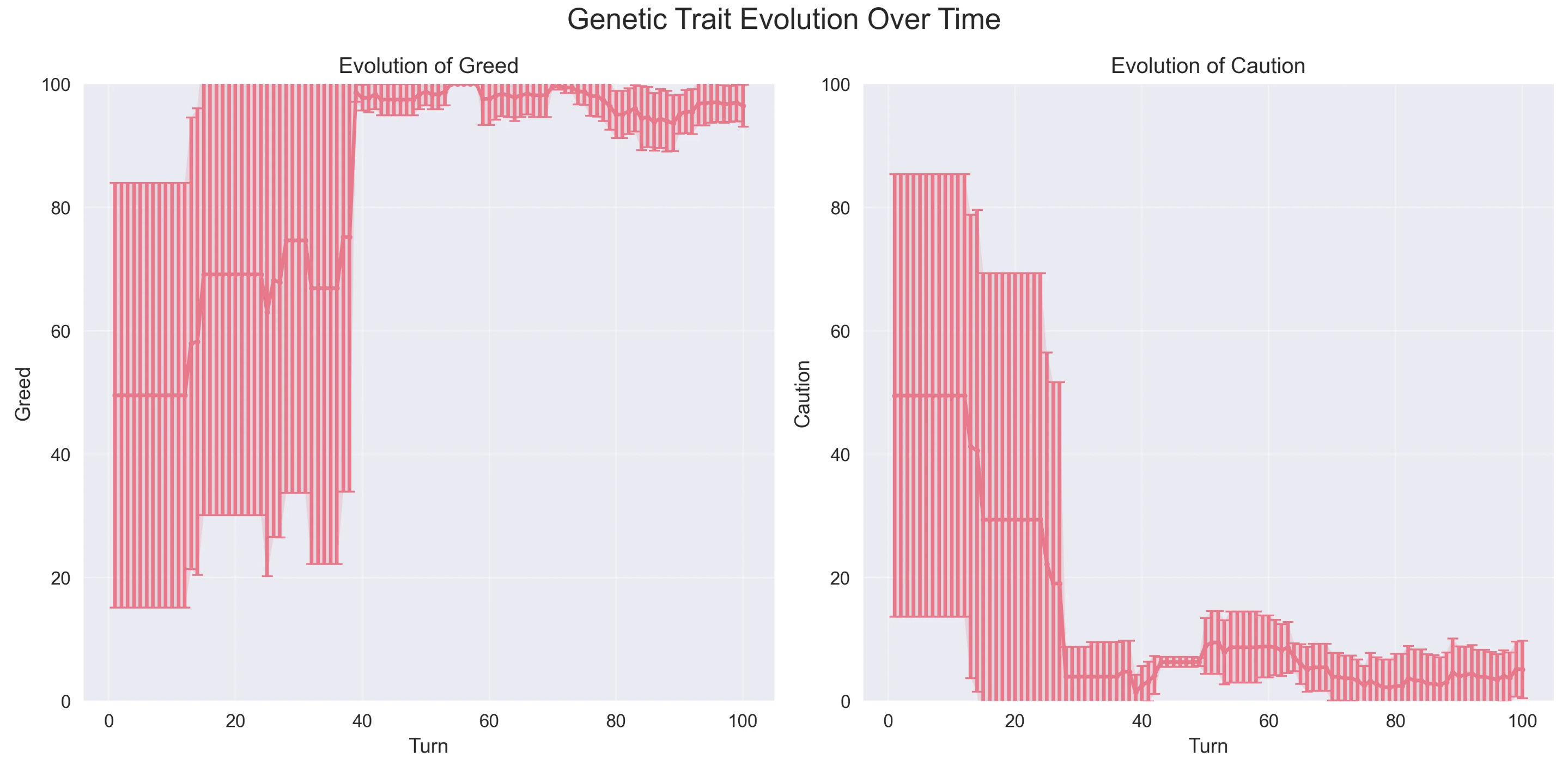

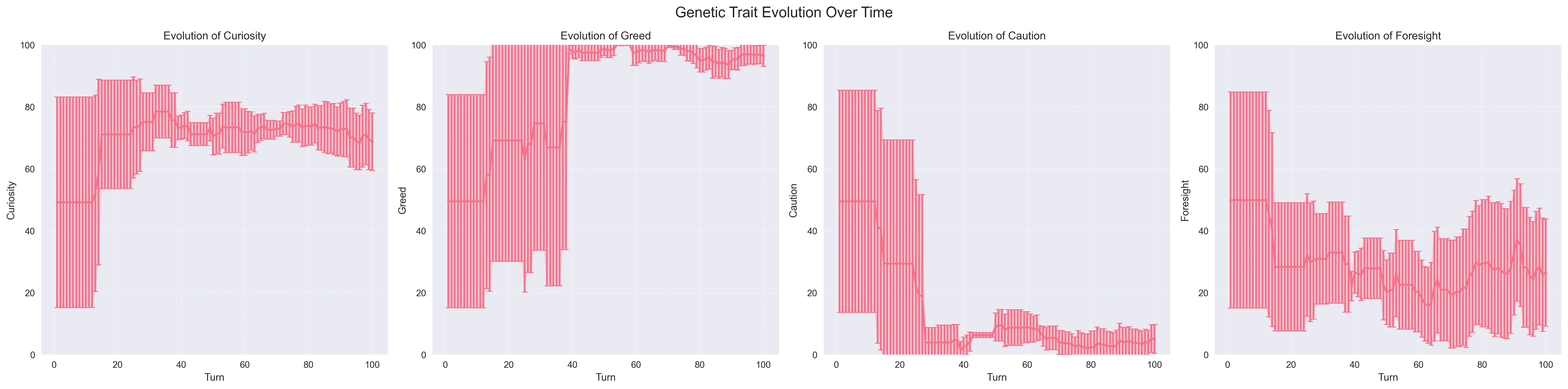

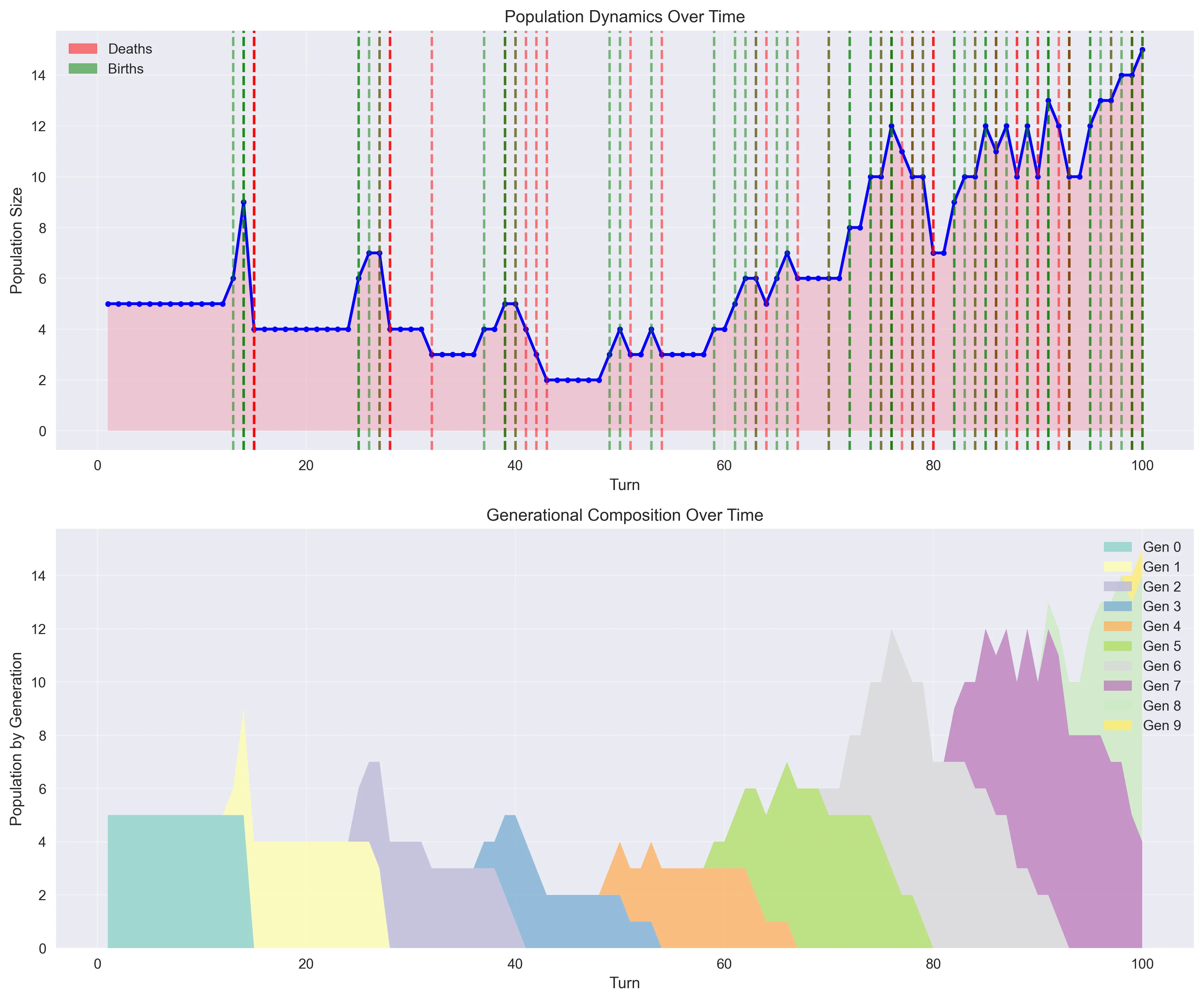

Over 100 turns, the genetics of the population shifted dramatically. Relative to the starting baseline, greed and curiosity increased while caution and foresight fell. The agents converged on aggressive, immediate-focused strategies because that's what the environment rewarded. In the first 50 turns growth was slow, and agents and their tiles were replaced more often than they expanded. Around turn 50 the traits settled into a more effective pattern and growth took off; by turn 100 the population was on its way to filling the entire environment.

Population grew from 5 to 15 agents across 9 generations, and the successful lineages completely replaced the original population by the end of turn 100.

The interesting part is that I didn't program the winning strategies; they emerged from selection pressure. The genetic algorithm found an optimization path through the behavioral space.

Optimization Without Prompting

This system doesn't optimize large text-based system prompts. Instead it acts as a behavioral steer: a short string that sets the strength of the attributes relevant to the agent's environment. Genetic trait evolution is efficient in time and cost because those strength values act as numeric handles, which lets us use more deterministic GA logic. Mutation is simple arithmetic, and selection is just a matter of comparing scores you already collected from evals.

If your agent population runs constantly and accumulates performance data, the genetic system can evolve in the background alongside it. Variations are tested through normal operation, and successful configurations naturally proliferate. It would be a bit like running a distributed search through behavioral space, where the selection pressure is informed by your evals pipeline.
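A rough sketch of that background bookkeeping: each time an eval scores a response, the score is filed under the genome that produced it, and a variant only becomes eligible for selection once it has enough samples. The `FitnessLedger` class, its method names, and the `min_samples` threshold are hypothetical, introduced here for illustration only.

```python
from collections import defaultdict
from statistics import mean


class FitnessLedger:
    """Accumulates eval scores per genome string during normal operation."""

    def __init__(self, min_samples: int = 5):
        # Assumed threshold: ignore variants with too few scores to trust.
        self.min_samples = min_samples
        self._scores = defaultdict(list)

    def record(self, genome: str, score: float) -> None:
        """File one eval score under the genome that produced the output."""
        self._scores[genome].append(score)

    def fitness(self, genome: str):
        """Mean score for a genome, or None if it has too few samples."""
        scores = self._scores.get(genome, [])
        if len(scores) < self.min_samples:
            return None
        return mean(scores)

    def ranked(self) -> list[str]:
        """Genomes with enough samples, best mean score first."""
        rated = [(self.fitness(g), g) for g in self._scores]
        rated = [(f, g) for f, g in rated if f is not None]
        return [g for _, g in sorted(rated, reverse=True)]
```

A periodic job could then read `ranked()`, retire the bottom of the list, and spawn mutated copies of the top entries, without any extra eval calls beyond the ones already running.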

Self-Optimization

In multi-agent systems, different behavioral profiles might suit different tasks. When each agent optimizes itself to its local environment, a web-browser agent might evolve different levels of curiosity or verbosity than an action-oriented issue-tracking agent would.

LLM-as-a-judge evals from Braintrust or user sentiment from services like Raindrop can supply fitness scores, so you could connect your eval system to a genetic breeding pipeline and let the population optimize itself.

Multiple populations could evolve for different sub-agents in a multi-agent system, where each agent's population is shaped by its own fitness function. Or each user could have their own population that optimizes specifically for them.

The premise is simple enough: agents with traits that work well persist into future generations, and the ones that don't fade away. It's artificial natural selection.

Certain trait combinations produced more successful offspring, and those combinations spread through the population while the others died out. Agents that reached old age (surviving the full lifespan) had totally different trait profiles than agents that starved. High greed and low caution basically predicted survival up to turn 100 in this specific artificial environment. The genetic system discovered this correlation through variation and selection, and the genetic profile of the population changed pretty dramatically by the end of the simulation.

While this experiment isn't necessarily designed for application, I believe principles from genetic algorithms and alife will help us build systems that find optimum behavioral configurations and continuously adapt as the environment or requirements change. Evals can behave as selection pressure on variants.

Evolution is ultimately an algorithm for optimizing complex systems under uncertain conditions. Applying it to LLM behavioral control feels like a natural fit, and one worth exploring further.